Fast and Efficient AWS Lambdas Built With Rust

As Barstool's resident Rustacean, it is my obligation to push for the use of Rust and to rave about its elegance and power. When running services at scale, my favorite solution is just 'throw it in a lambda' and let AWS worry about handling request load.

Beyond that, shaving milliseconds off each request is a good way to trim cost, and that's where Rust can come in and do some heavy lifting. AWS does in fact support Rust as a Lambda runtime, but for this example I'll be using the fantastic IaC framework Serverless.

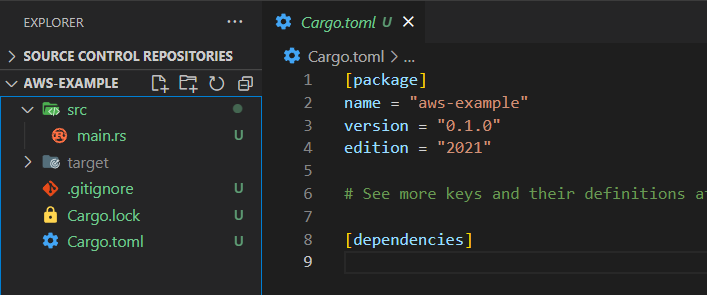

More specifically, its serverless-rust plugin. To get started, run `cargo init aws-example` to create a new Rust project.

In the Cargo.toml, we are going to add the following new dependencies:

```toml
[dependencies]
lambda_http = "0.7.2"
tokio = { version = "1.22.0", features = ["macros", "rt-multi-thread"] }
serde = { version = "1.0.151", features = ["derive"] }
serde_json = "1.0.91"
```

If you use Rust, you are likely familiar with most of these dependencies, save for lambda_http, which adds types and helpers for transforming AWS request events into standard HTTP request and response types. We will also need to run `npm init -y` in the root of the project and use the following package.json:

```json
{
  "name": "aws-example",
  "version": "1.0.0",
  "devDependencies": {
    "serverless": "^3.24.0",
    "serverless-rust": "^0.3.8"
  }
}
```

As you can see, we only need two packages, Serverless and the serverless-rust plugin, and since both are only used at deploy time they can live in devDependencies.

We'll also add a serverless.yml file in the root of the project, and paste the following in:

```yaml
service: aws-example

frameworkVersion: "3"

provider:
  name: aws
  runtime: rust
  region: us-east-1
  versionFunctions: false
  memorySize: 2048
  timeout: 30

plugins:
  - serverless-rust

package:
  individually: true

custom:
  rust:
    target: x86_64-unknown-linux-musl
    linker: clang
    dockerless: true
```

I won't go over every line of the custom section, but you can read all about these options in the serverless-rust plugin's documentation.
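To put the "milliseconds per request" angle into numbers: AWS bills Lambda compute in GB-seconds, meaning configured memory (in GB, with 1024 MB = 1 GB) multiplied by billed duration. Below is a minimal std-only sketch of that arithmetic. The function names are hypothetical, the per-GB-second price is deliberately left as a parameter (look it up for your region and architecture), and per-request charges are ignored.

```rust
/// Compute usage for one invocation, in GB-seconds:
/// configured memory (MB / 1024 = GB) times billed duration (ms / 1000 = s).
fn gb_seconds(memory_mb: u32, billed_ms: u64) -> f64 {
    (f64::from(memory_mb) / 1024.0) * (billed_ms as f64 / 1000.0)
}

/// Estimated compute cost for `invocations` identical requests.
/// `price_per_gb_second` is an input you supply, not a real AWS price.
/// Per-request charges are not included.
fn compute_cost(memory_mb: u32, billed_ms: u64, invocations: u64, price_per_gb_second: f64) -> f64 {
    gb_seconds(memory_mb, billed_ms) * invocations as f64 * price_per_gb_second
}
```

With `memorySize: 2048` as configured above, every billed millisecond is 0.002 GB-seconds, so a handler that finishes in 5 ms instead of 50 ms uses a tenth of the compute GB-seconds per request.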

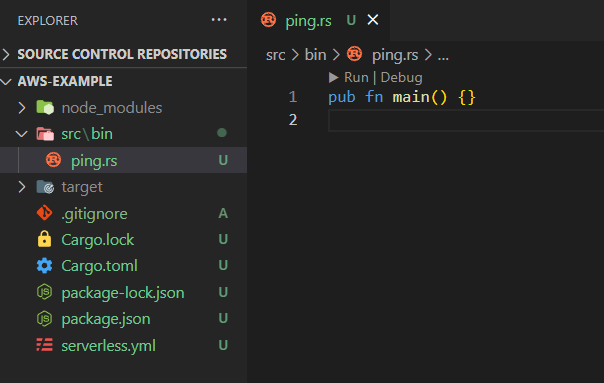

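In the project's src directory, create a Rust binary at src/bin/ping.rs (or name it whatever you want); Cargo treats every file in src/bin/ as its own binary. Serverless can point to individual Rust binaries and deploy each one as a Lambda that scales independently. You can also delete src/main.rs, which leaves src/bin/ping.rs alongside Cargo.toml, package.json, and serverless.yml at the project root.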

The actual code is very straightforward: we make each binary's entry point async with the `#[tokio::main]` macro and return a response built with the lambda_http crate. For example, in src/bin/ping.rs:

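```rust
use lambda_http::{
    aws_lambda_events::serde_json::json, http::StatusCode, run, service_fn, Error, IntoResponse,
    Request, Response,
};

/// Handles every invocation of the `ping` Lambda and replies with a small JSON body.
pub async fn ping(_event: Request) -> Result<impl IntoResponse, Error> {
    let body = json!({ "message": "sup" }).to_string();

    let response = Response::builder()
        .status(StatusCode::OK)
        .header("Content-Type", "application/json")
        .body(body)
        // Surface builder errors (such as an invalid header value) through `?`.
        .map_err(Box::new)?;

    Ok(response)
}

#[tokio::main]
async fn main() -> Result<(), Error> {
    // Hand the async handler to the Lambda runtime, which calls `ping` for each event.
    run(service_fn(ping)).await
}
```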

You could, of course, define your own response structs that implement a trait for turning &self into a string, such as Display, or serde's Serialize combined with serde_json::to_string.
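As a std-only sketch of that idea, assuming a hypothetical `PingResponse` struct: implementing `std::fmt::Display` gives you `.to_string()` for free, and that string could be passed to `.body(...)` in place of the `json!` call. The `escape_json` helper is also hypothetical and only covers the characters JSON requires escaping; in a real project, deriving serde's `Serialize` (serde is already in Cargo.toml) is likely the more robust choice.

```rust
use std::fmt;

/// Hypothetical response type for the ping handler.
struct PingResponse {
    message: String,
}

/// Escape a string for use inside a JSON string literal:
/// quotes, backslashes, and control characters below U+0020.
fn escape_json(s: &str) -> String {
    let mut out = String::with_capacity(s.len());
    for c in s.chars() {
        match c {
            '"' => out.push_str("\\\""),
            '\\' => out.push_str("\\\\"),
            '\n' => out.push_str("\\n"),
            '\r' => out.push_str("\\r"),
            '\t' => out.push_str("\\t"),
            // Remaining control characters use the \u00XX form.
            c if (c as u32) < 0x20 => out.push_str(&format!("\\u{:04x}", c as u32)),
            c => out.push(c),
        }
    }
    out
}

impl fmt::Display for PingResponse {
    /// Writes the struct as a one-field JSON object, e.g. {"message":"sup"}.
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        write!(f, "{{\"message\":\"{}\"}}", escape_json(&self.message))
    }
}
```

Inside the handler, `let body = PingResponse { message: "sup".into() }.to_string();` would produce the same body as the `json!` version.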

Next, all we have to do to invoke our binary is add it to our Serverless definition with whatever events we like. The handler syntax is application-name.binary-name, where the application name is the Cargo package name:

```yaml
functions:
  ping:
    handler: aws-example.ping
    # function url
    url: true
    events:
      # v1 REST Api
      - http:
          method: GET
          path: /api/v1/ping
      # v2 HTTP Api
      - httpApi:
          method: GET
          path: /api/v2/ping
```

I've set up three ways for our Lambda to be invoked: a v1 REST API, a v2 HTTP API, and a function URL. Which one you use is simply up to you!
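The handler value `aws-example.ping` follows the application-name.binary-name convention. As a purely illustrative sketch (hypothetical helpers, not the plugin's actual code), this is how such a string splits into a Cargo package name and a binary name, and which source file that binary comes from under Cargo's default src/bin layout:

```rust
/// Hypothetical helper: split "<cargo package>.<binary name>" into its two parts.
/// Cargo package names cannot contain '.', so the first dot is the separator.
fn split_handler(handler: &str) -> Option<(&str, &str)> {
    let (package, bin) = handler.split_once('.')?;
    if package.is_empty() || bin.is_empty() {
        return None;
    }
    Some((package, bin))
}

/// With Cargo's default layout, binary `bin` is built from src/bin/<bin>.rs.
fn bin_source_path(bin: &str) -> String {
    format!("src/bin/{bin}.rs")
}
```

Adding another Lambda is then a matter of adding another file under src/bin/ and another `functions` entry pointing at `aws-example.<that-file-name>`.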

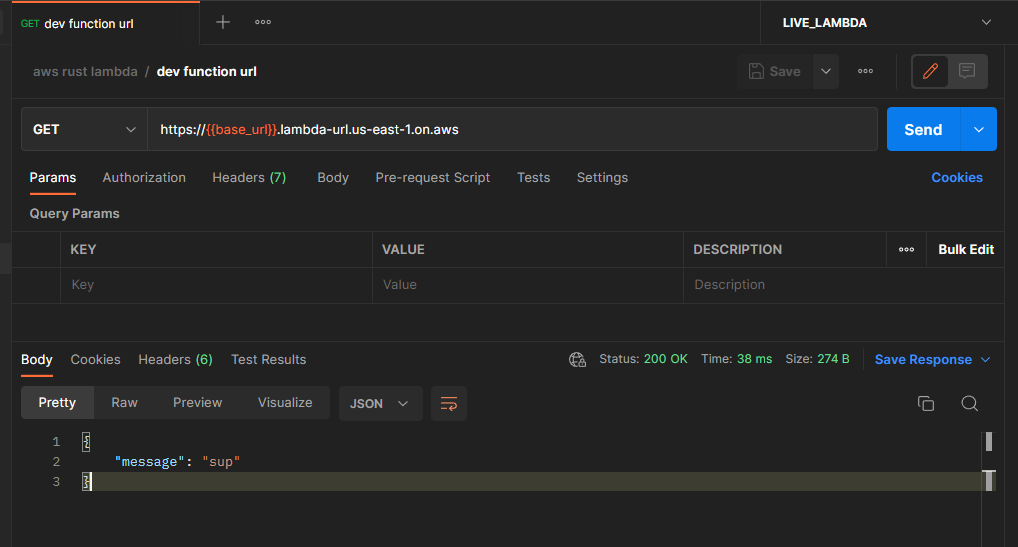

Finally, all that's left is to deploy and invoke our Lambda. We can deploy through the Serverless CLI with `npx serverless deploy --stage prod`, then hit any one of our three endpoints.
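The deploy output lists the real endpoint URLs. As a rough sketch of their general shape, assuming AWS's default domains and the Framework's default HTTP API stage (no stage prefix in the path), here are hypothetical helpers that build each URL from placeholder IDs; the IDs themselves are generated by AWS at deploy time.

```rust
/// v1 REST API: the stage name ("prod" here) is part of the path.
fn rest_api_v1_url(api_id: &str, region: &str, stage: &str, path: &str) -> String {
    format!("https://{api_id}.execute-api.{region}.amazonaws.com/{stage}{path}")
}

/// v2 HTTP API on its default stage: no stage segment in the path.
fn http_api_v2_url(api_id: &str, region: &str, path: &str) -> String {
    format!("https://{api_id}.execute-api.{region}.amazonaws.com{path}")
}

/// Function URL: a dedicated hostname that invokes the function directly.
fn function_url(url_id: &str, region: &str) -> String {
    format!("https://{url_id}.lambda-url.{region}.on.aws/")
}
```

For example, with a placeholder REST API ID of `abc123`, the v1 ping endpoint would look like `https://abc123.execute-api.us-east-1.amazonaws.com/prod/api/v1/ping`.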

Ta-da! A super fast, scalable, and very resource-efficient service using Rust on AWS :)